Recursion Stinks

see also: Recursion Stinks

This is a code-heavy post. Code is presented as images to preserve formatting and highlighting, with captions linked to plain text files.

I always ask software engineering interview candidates to implement a factorial function, then (assuming factorial goes well) a Fibonacci function. These are admittedly trite exercises that, for senior engineers, serve as quick warm-ups. Junior engineers didn’t used to have much trouble with them, either; but over the past ~10 years, a strange trend has arisen whereby new college grads consistently go straight for recursive implementations. They all seem to do this. It’s uncanny. (Are entry-level software engineers all reading the same prep book, or watching the same YouTube channel or something?) Recursion usually makes for terrible solutions, and I’d like to show you some reasons why.

🪤 Despite The Problem with Big O, this post bears a light sprinkling of big-O expressions. It’s fine to skip right past them if you want. Moreover, we’ll distinguish space and time complexity using ‘s’ and ‘t’ subscripts; for example, Oₛ(N)Oₜ(2ᴺ) indicates that an algorithm completes in linear space and exponential time. But again, it’s fine to ignore all that, unless you’re a glutton for undergrad-level Computer Science.

Factorial

First, let’s look at a recursive factorial function. We’ll use Rust, but (except where noted) the same principles hold for other languages.

The first thing that should concern you about this code is integer overflow. Factorials get big fast. Integer overflow is easy enough to avoid (potentially at the expense of performance) by switching to a growable return type like num::BigUint. Some languages, like Python, even use growable integers by default.

The bigger problem is stack overflow. Most programming languages (with notable exceptions like Golang, as explained by Dave Cheney) allow each thread only a finite amount of stack space. Each recursive call takes another chunk out of that space. When the stack space is gone, the program crashes, and it crashes ugly.

![$ cargo run --quiet --release thread 'main' has overflowed its stack fatal runtime error: stack overflow [1] 9275 IOT instruction cargo run --quiet --release $ cargo run --quiet --release thread 'main' has overflowed its stack fatal runtime error: stack overflow [1] 9275 IOT instruction cargo run --quiet --release](https://substackcdn.com/image/fetch/$s_!0x2T!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2Fa11a2916-f063-4fb1-bb9a-10442b0aae63_2052x434.png)

Some languages (like Scheme, Haskell, and Scala) support Tail Call Elision (TCE), silently optimizing certain functions so they don’t overflow the stack. The implementation above is not amenable to this optimization, and most candidates who jump straight to recursive solutions (or at least, the ones I’ve asked) can’t even explain what a “tail call” is. (The point isn’t that engineers should know about TCE, but that we shouldn’t write code that crashes in the absence of particular optimizations.)

Linear (Oₛ(1)) recursion is exactly the sort of thing that works fine in test code, but blows up in production. Do not recurse in production code unless you have a good reason. Figuring out whether my team will have to explain things like this to new hires is part of the reason I still ask these clichéd, boring questions.

The obvious implementation I would expect most juniors to write—and I swear, they used to do so—is a for-loop:

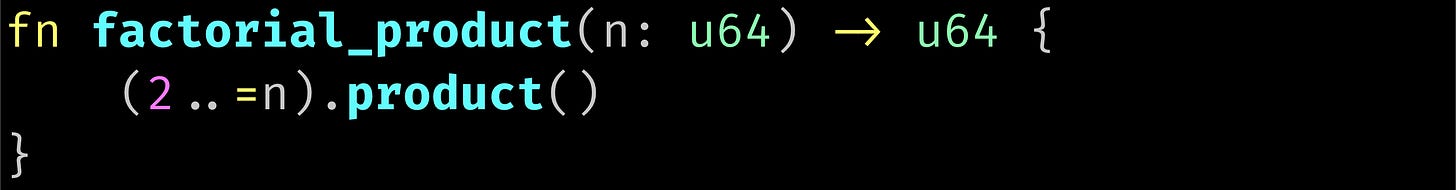

A plain old loop is absolutely fine for something like factorial. A more sophisticated approach would be to delegate most of the work to a standard library function:

Yet another option is a look-up table of precomputed values:

![const FACTORIALS: [u64; 21] = [ 1, 1, 2, 6, 24, 120, 720, 5040, 40320, 362880, 3628800, 39916800, 479001600, 6227020800, 87178291200, 1307674368000, 20922789888000, 355687428096000, 6402373705728000, 121645100408832000, 2432902008176640000, ]; fn factorial_table(n: u64) -> u64 { FACTORIALS[n as usize] } const FACTORIALS: [u64; 21] = [ 1, 1, 2, 6, 24, 120, 720, 5040, 40320, 362880, 3628800, 39916800, 479001600, 6227020800, 87178291200, 1307674368000, 20922789888000, 355687428096000, 6402373705728000, 121645100408832000, 2432902008176640000, ]; fn factorial_table(n: u64) -> u64 { FACTORIALS[n as usize] }](https://substackcdn.com/image/fetch/$s_!Lcn4!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2Fe1e6460c-c8bf-48d3-9cd1-999cedce550f_3072x1456.png)

None of these solutions is liable to blow the call stack, and all of them have better complexity than the recursive solution. These principles aren’t specific to factorial, either: If you want to be a professional computer programmer, you have to be able to write a for-loop. Don’t get any fancier than that unless you can explain your reasons for doing so.

Fibonacci

The reason I ask Fibonacci, even if someone nails factorial, is that efficient implementation requires a translation from the problem domain to the solution domain. That’s something a candidate will do easily if (and only if) they’re already in the habit.

The Fibonacci sequence, as a mathematical object, is defined recursively:

fib(0) = 0

fib(1) = 1

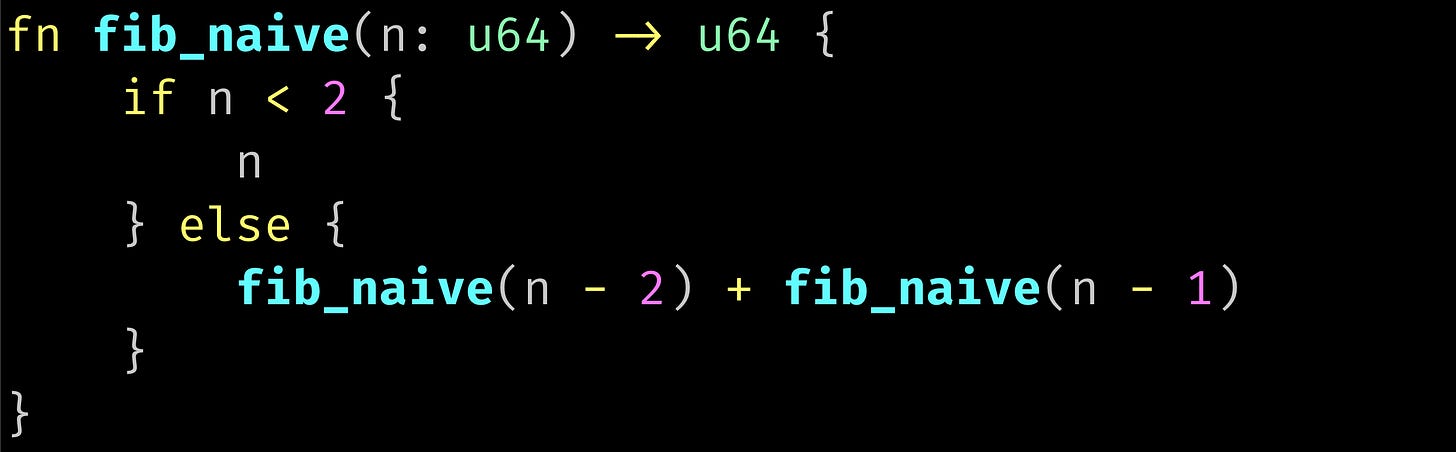

fib(n) = fib(n - 2) + fib(n - 1)A naive implementation looks like this:

Aside from the overflow issues we already discussed in the context of factorials, this Fibonacci function has a severe scalability problem in the form of exponential time complexity. This is another great example of something that works fine in tests, but can cause production outages in the real world. Exponential isn’t quite as bad as factorial, but it’s bad enough to ruin your day.

![fn main() { for n in [10, 20, 30, 40, 50, 60] { let now = std::time::Instant::now(); let val = fib_naive(n); let secs = now.elapsed().as_secs_f64(); println!("{secs:20.8}s fib({n}) = {val}"); } } fn main() { for n in [10, 20, 30, 40, 50, 60] { let now = std::time::Instant::now(); let val = fib_naive(n); let secs = now.elapsed().as_secs_f64(); println!("{secs:20.8}s fib({n}) = {val}"); } }](https://substackcdn.com/image/fetch/$s_!Ybou!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2F45912958-aeb4-465c-879a-2b2b2338e7d1_3016x1070.png)

The complexity here isn’t due to recursion per se, but to a misunderstanding of how we should translate inductive definitions like fib(n) into computer programs where time and space actually matter.

So many good ideas are never heard from again once they embark in a voyage on the semantic gulf.

—Alan Perlis, Epigrams in Programming

The first step is to identify the state that must be passed from one iteration to the next; in other words, the signature of the recursive function. For Fibonacci, the state is the most recent two results, and the number of iterations remaining. Here’s a linear complexity (tail-)recursive Fibonacci implementation:

When candidates who’ve implemented the naive solution above are asked to address the exponential complexity, they always—and I mean, always—try adding a cache, effectively memoizing the function. That gets us back down to linear space and time (Oₛ(N)Oₜ(N)), but a simple loop would run in constant space and linear time:

Higher Order Functions

Students of Functional Programming spend their first year learning to recurse, and their second year learning not to.

—Unknown

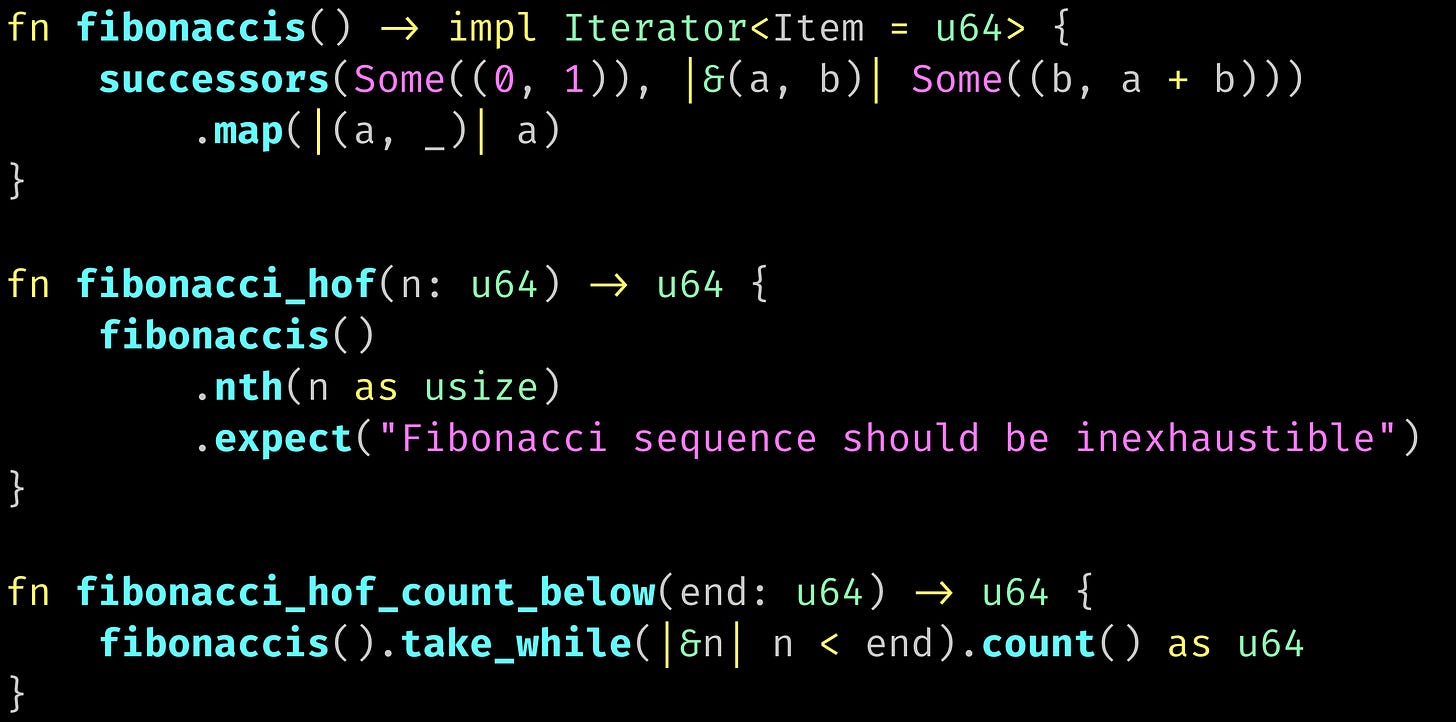

Much as factorial can be expressed as the product of a range of integers, a more sophisticated Fibonacci implementation might use a Higher Order Function (HOF) like successors:

Using HOFs instead of hand-rolled loops is a pretty strong indicator that this ain’t the author’s first rodeo. (Similar HOFs exist in most languages, or else can be easily implemented using iterators or generator functions. For example, what Rust calls ‘successors,’ Haskell and Clojure both call ‘iterate.’)

Higher order functions are often even better than loops, for two main reasons:

They express your intent directly. A loop that processes elements of one array and adds them to another is rarely as clear as a call to map and/or filter (or an equivalent comprehension in Haskell, Scala, or Python). Such direct expression of intent makes the code easier to work with for both humans and compilers.

In some cases for_each may also be faster than a loop, because it will use internal iteration on adapters like Chain.

—Trait std::iter::IteratorThey’re more composable, enabling you to share low-level functionality across functions.

Suppose we want to know how many Fibonacci numbers are below a given value. If we start from the ‘fibonacci_loop’ function above, there’s no good way to factor out the loop logic itself. We’re stuck writing a completely new, though conceptually very similar loop.

With the HOF based version, though, we can easily factor out not only the iteration, but the iteration state; in this case, keeping the value ‘b’ out of the caller’s scope.

Some technical leaders take the preference for HOFs to the extreme. Others positively fetishize raw loops. My own experience is that HOFs are almost always the best option. They scale better than loops, both within individual programs, and as library features. They also tend to go hand in hand with short variable names, and let you mentally separate mechanics from semantics; e.g., separating loop logic (I have a list, and want the Nth item) from the business domain (I’m implementing a Dutch auction, and need the second-highest bid).

But, the glue required to match HOF callback signatures—for example, in our HOF-based Fibonacci functions above, the Some() and .expect() calls—is a pain for small examples. It’s often best to start with a short hand-written loop, but replace it with a HOF at the very first sign of complexity.

OK, fine, recursion is occasionally useful

When, if ever, should you use recursion? Here’s a fancy Fibonacci function I wrote a long time ago that recurses instead of looping. I felt OK writing that because it runs in logarithmic, rather than linear, time and space (Oₛ(log N)Oₜ(log N)). Recursion is also a convenient way to get a data stack for free, if you’re willing to abuse the call stack to hold data; for example, when traversing a binary tree. Even then, it’s usually better to use an explicit stack.

💡 The only difference between depth-first and breadth-first search is whether newly encountered nodes are added to a stack (LIFO) or a queue (FIFO).

If you know what you’re doing and why, then it’s OK for recursion to be one of the tools in your toolbox. It is not, however, a good idea to recurse where a loop or HOF would suffice merely because recursion is what the cool kids seem to be doing.