Variables

This post uses inline code blocks for very short code snippets, and images with alt text and links to GitHub for longer snippets. Feedback is welcome.

New programmers are taught about variables on day 1, but only through vague analogies like “boxes you can put things in.” Even experienced programmers sometimes have no more than an intuitive understanding of what the word “variable” means. Let’s nerd out a little this week, and take a deeper look at what a variable is.

Mathematics

In mathematics, a variable is a name bound to a value. A statement like x = 5 binds the name x to the value 5. A “name” is usually a letter, word, or short phrase. Sometimes somebody gets wacky and declares a variable whose name is a shape or a cartoon character or something. A “value” can be any timeless concept, like the number 5 or the color blue.

A variable may represent different values in different situations. For example, we can define functions that map argument values to result values, using variables called parameters to represent arguments abstractly. In the function definition f(x) = x + 1, the variable x is a parameter. In the function calls f(3) and f(5), x is bound to the arguments 3 and 5, respectively.

Values never, ever change. A value is forever. Variables that always represent the same value, like π for the ratio of a circle’s circumference to its diameter, are sometimes called constants. In fact, if you call π a variable instead of a constant, you’re likely to annoy a lot of pedants; but in your heart of hearts, know that symbolic constants like π are just variables that don’t vary.

Rose is a rose is a rose is a rose.

—Gertrude Stein, Sacred Emily

Code

In computer programming, a variable is a name bound to an object, which in turn represents a value. This extra level of indirection can be tough to explain, which is why it’s rarely taught to newcomers. Nevertheless, these concepts are fundamental to software engineering, and you ought to understand them if you do this stuff professionally.

An “object” is a region of memory that represents a value. Computer “memory” is a big list of numbers, and a “region” is a contiguous sublist of memory. Modern computers can store billions of small numbers in their Random Access Memory (RAM) all at the same time. Of course, computer software needs to represent lots of different kinds of values, not only small numbers. That’s where types come in.

Types

A “type” is a system for storing values in memory, or interpreting values previously stored there. A region of computer memory (i.e., a list of numbers) on its own isn’t very useful, because you can’t tell what value it represents unless you also know its type.

💡Types (sometimes called “classes”) are often explained to new programmers as being blueprints for objects. That’s not a bad analogy, but it breaks down quickly as you dig deeper into type theory. One rarely hears about “blueprint contravariance” or “higher kinded blueprints.”

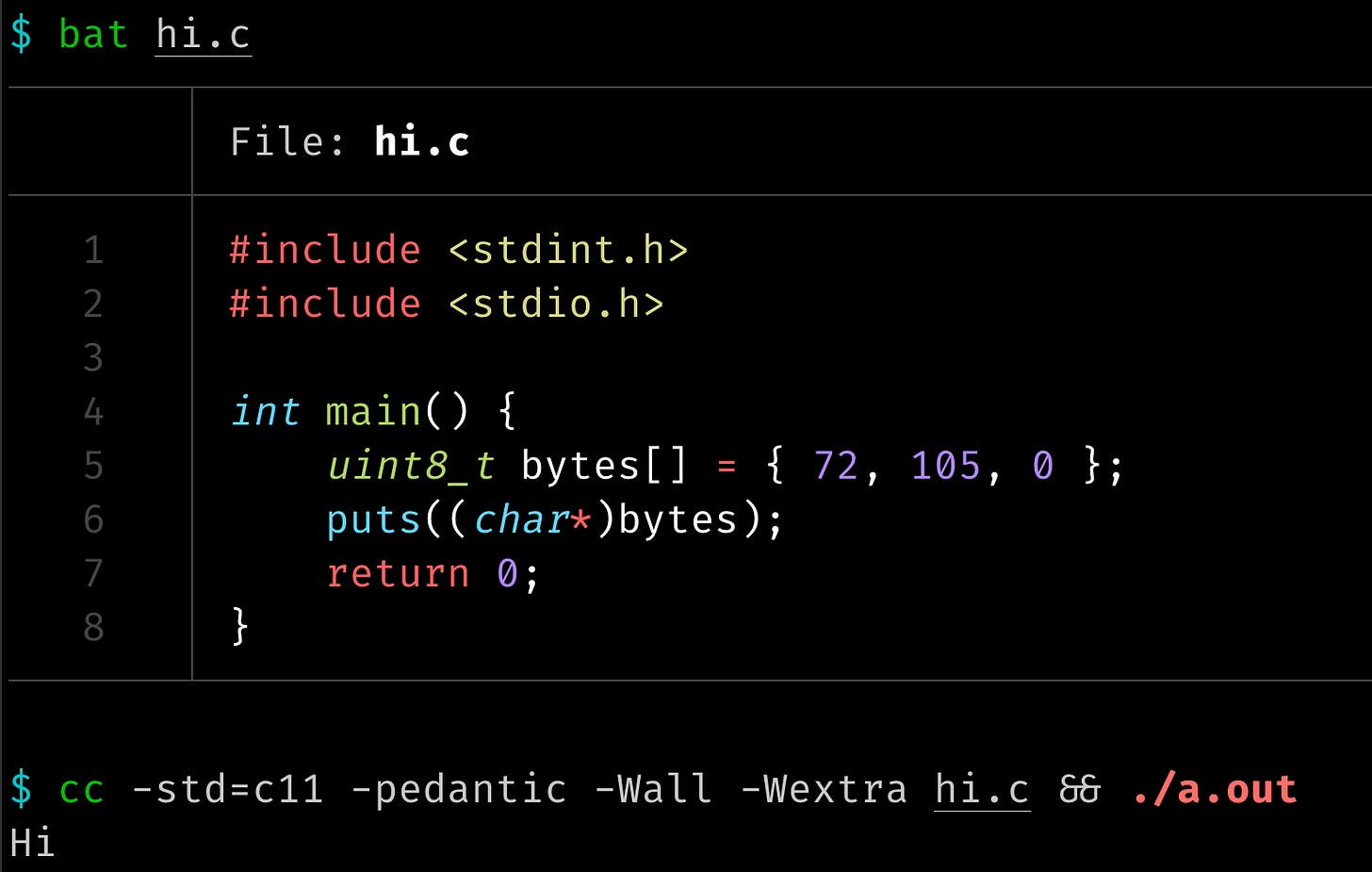

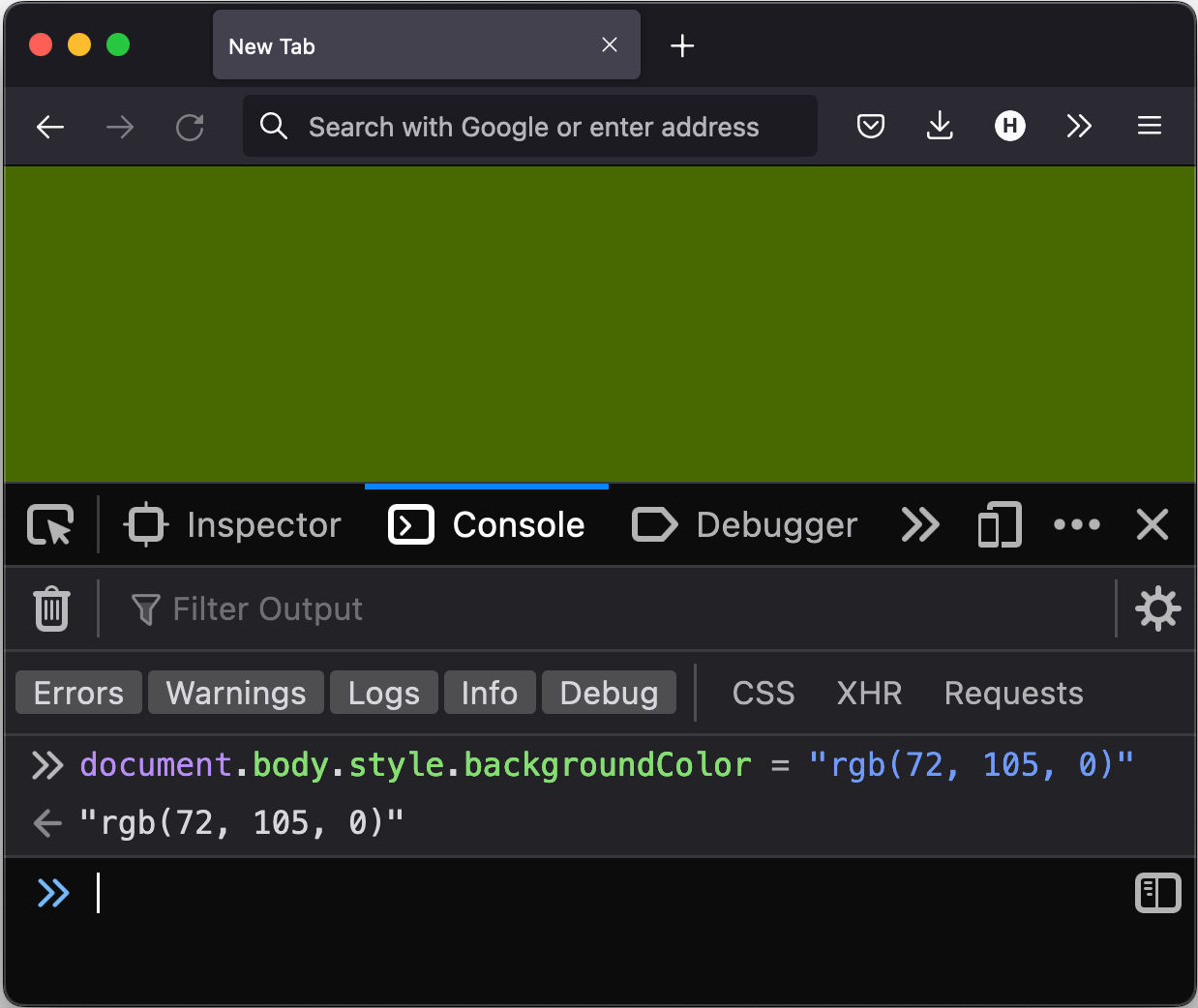

Types for representing common kinds of values, such as colors or strings of text, often use standard encodings to map values into numbers. For example, the list of numbers [72, 105, 0] represents an ugly shade of green as an RGB encoded color, but the word Hi (followed by a null terminator) when interpreted as UTF-8 encoded text.
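Here's a small Rust sketch of the same three numbers read two ways. The Rgb struct and the as_color and as_text helpers are names invented for this illustration, and treating the zero byte as a C-style terminator is an assumption about how the text was stored.

```rust
/// A hypothetical RGB color type: one byte each for red, green, and blue.
#[derive(Debug, PartialEq)]
struct Rgb {
    red: u8,
    green: u8,
    blue: u8,
}

/// Interpret three bytes as an RGB color.
fn as_color(bytes: [u8; 3]) -> Rgb {
    Rgb { red: bytes[0], green: bytes[1], blue: bytes[2] }
}

/// Interpret bytes as UTF-8 text, stopping at the first zero byte (if any).
/// Returns None if the bytes before the terminator are not valid UTF-8.
fn as_text(bytes: &[u8]) -> Option<&str> {
    let end = bytes.iter().position(|&b| b == 0).unwrap_or(bytes.len());
    std::str::from_utf8(&bytes[..end]).ok()
}

fn main() {
    // The same region of memory, interpreted through two different types.
    let memory = [72u8, 105, 0];
    println!("{:?}", as_color(memory));
    println!("{:?}", as_text(&memory));
}
```

The bytes never change; only the type used to interpret them does.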

None of this is specific to any single programming language, but the C standard offers a pretty good definition of object:

object

region of data storage in the execution environment, the contents of which can represent values. Note 1 to entry: When referenced, an object can be interpreted as having a particular type.

— N2596 (working draft), 3.15

Static vs. dynamic types

You might think that when a program stores a value in memory (i.e., in an object), it also has to store some type information, so that it doesn’t forget what kind of value was stored. Types used that way are described as dynamic.

Some languages also support static types, which are tracked through analysis of the source code. This is arguably the best of all possible worlds. We get the safety of knowing whether we got our types straight before the program even runs (!), and without the performance overhead of storing and retrieving dynamic type information.

The chief shortcoming of static types is that sometimes, we won’t know what kinds of values we need until the program is already running. For that reason, even “statically typed” languages also have to support dynamic types. (C in particular does so grudgingly, but most newer languages are less judgy about it.)

Variables having static vs. dynamic types are sometimes said to use early or late binding, respectively.
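As a rough illustration in Rust, a dynamically typed reference has to carry its type information around at runtime, and that shows up in its size. The describe function is a hypothetical helper; std::any::Any and downcast_ref are standard library facilities.

```rust
use std::any::Any;
use std::mem::size_of;

/// Recover the dynamic type of a value at runtime. The type information
/// travels with the reference rather than living only in the source code.
fn describe(value: &dyn Any) -> String {
    if let Some(n) = value.downcast_ref::<i32>() {
        format!("i32: {}", n)
    } else if let Some(s) = value.downcast_ref::<&str>() {
        format!("str: {}", s)
    } else {
        String::from("unknown type")
    }
}

fn main() {
    // A statically typed reference is a single pointer.
    println!("{}", size_of::<&i32>());
    // A dynamically typed reference is a data pointer plus a pointer to
    // type metadata, so it is twice as wide.
    println!("{}", size_of::<&dyn Any>());

    println!("{}", describe(&42_i32));
    println!("{}", describe(&"hello"));
    println!("{}", describe(&1.5_f64));
}
```

The extra pointer is the "performance overhead of storing and retrieving dynamic type information" in its most literal form.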

Object footprints

Part of the definition of an object is that its region is contiguous, meaning all the numbers are stored next to each other in memory. The contiguous region is called the object’s footprint. There are two caveats you should be aware of: The footprint may contain padding that does not store any part of the object’s value, and pointers to other regions that do.

Padding

Indexes into memory are called addresses, and they refer to individual bytes, each big enough to store one small number; typically, an integer from 0 through 255. Computers can work with some types efficiently only when their values are stored at addresses divisible by certain numbers (usually 2, 4, or 8). Strange but true. Such types are said to have alignment requirements. So, sometimes an object’s footprint will include meaningless bytes as “padding,” merely so that the next meaningful part of the value lives at a suitably aligned address.
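You can watch padding appear with a couple of standard library calls. In this sketch, the Padded struct is hypothetical, and the numbers in the comments assume a common platform where u32 must be 4-byte aligned.

```rust
use std::mem::{align_of, size_of};

/// `repr(C)` asks Rust to lay out fields in declaration order, as C would.
#[allow(dead_code)]
#[repr(C)]
struct Padded {
    tag: u8,    // 1 meaningful byte, followed by padding
    value: u32, // 4 bytes that must start at a suitably aligned address
}

fn main() {
    // The fields hold only 5 bytes of meaningful data...
    println!("fields: {}", size_of::<u8>() + size_of::<u32>());
    // ...but the footprint is larger, because padding follows `tag`.
    println!("footprint: {}", size_of::<Padded>());
    println!("alignment: {}", align_of::<Padded>());
}
```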

Pointers

A “pointer” is an object whose value is a memory address. A complex object’s footprint might include pointers so that it can share data with other objects, or so that data can live in a specific part of memory; for example, storing huge chunks of data on the heap (which has plenty of room) even when the object’s footprint lives on the stack (which does not).
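Rust's String is a handy example: its footprint is typically just a pointer plus some bookkeeping, while the text itself lives on the heap. The string_footprint helper below is a made-up name for this sketch.

```rust
use std::mem::size_of_val;

/// Size in bytes of the String object itself, not of the text it points to.
fn string_footprint(s: &String) -> usize {
    size_of_val(s)
}

fn main() {
    let short = String::from("hi");
    let long = "x".repeat(1_000_000);

    // The footprints match, even though one string holds a million bytes.
    println!("{} {}", string_footprint(&short), string_footprint(&long));
    // The text lengths differ; that data lives on the heap, behind a pointer.
    println!("{} {}", short.len(), long.len());
}
```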

Move semantics

It’s sometimes useful to move objects around in memory. For example, maybe we’ve stored the color blue at address 1024, but now we want to store it at address 512 instead. Computer hardware doesn’t really support move semantics, so moves are usually implemented as copies. Move semantics are yet another reason for an object’s footprint to contain pointers: When we move an object, it’s usually cheaper to copy a pointer than to copy all the data the pointer points to.

Garbage collected languages often move objects, too. Copying and compacting collectors relocate live objects as they reclaim memory. (A plain mark and sweep collector leaves objects in place, but many production collectors compact the heap as well.) This is one reason it’s so hard to get an object’s address (or any unique identifier) in most GC languages: The language designers don’t want you to think of the object as living at any particular address, because it might get moved.

C++ has an, um, interesting approach to this: Rather than moving the object, you move the value from one object to another. The original object is “move copied,” and left in some technically valid but unpredictable state. The introduction of move semantics to C++ in 2011 was a game changer in terms of performance and expressiveness, but it also added one heck of a foot gun to a language already rich in weapons for shooting oneself in the foot.

Exotic variables

Reference types; or, “Dammit, Java!”

If the value we want to represent happens to be a small number, then it’s easy to imagine how we’d store it in memory, which is (after all) a list of numbers. Most programming languages even include built-in types (sometimes called primitive or intrinsic types) for storing such values. User Defined Types (UDTs), on the other hand, get different levels of support in different languages.

C++ bends over backward to give UDTs the same level of support as primitive types. The Design and Evolution of C++, penned by the language’s creator, describes how making UDTs first class entities was an overriding design goal.

At the other end of the spectrum, Java treats primitive and user-defined types completely differently. Java primitives are stored entirely within the object’s footprint, whereas UDTs live entirely outside it. The nomenclature is confusing: Java provides primitive types like int to represent small numbers, and (like all programming languages) stores them in regions of memory; but weirdly, does not consider those regions “objects.” Instead, only variables of reference types are bound to (what Java considers) objects. Ironically, variables of reference types cannot be bound directly to objects. Instead, they may be bound only to references to the actual objects.

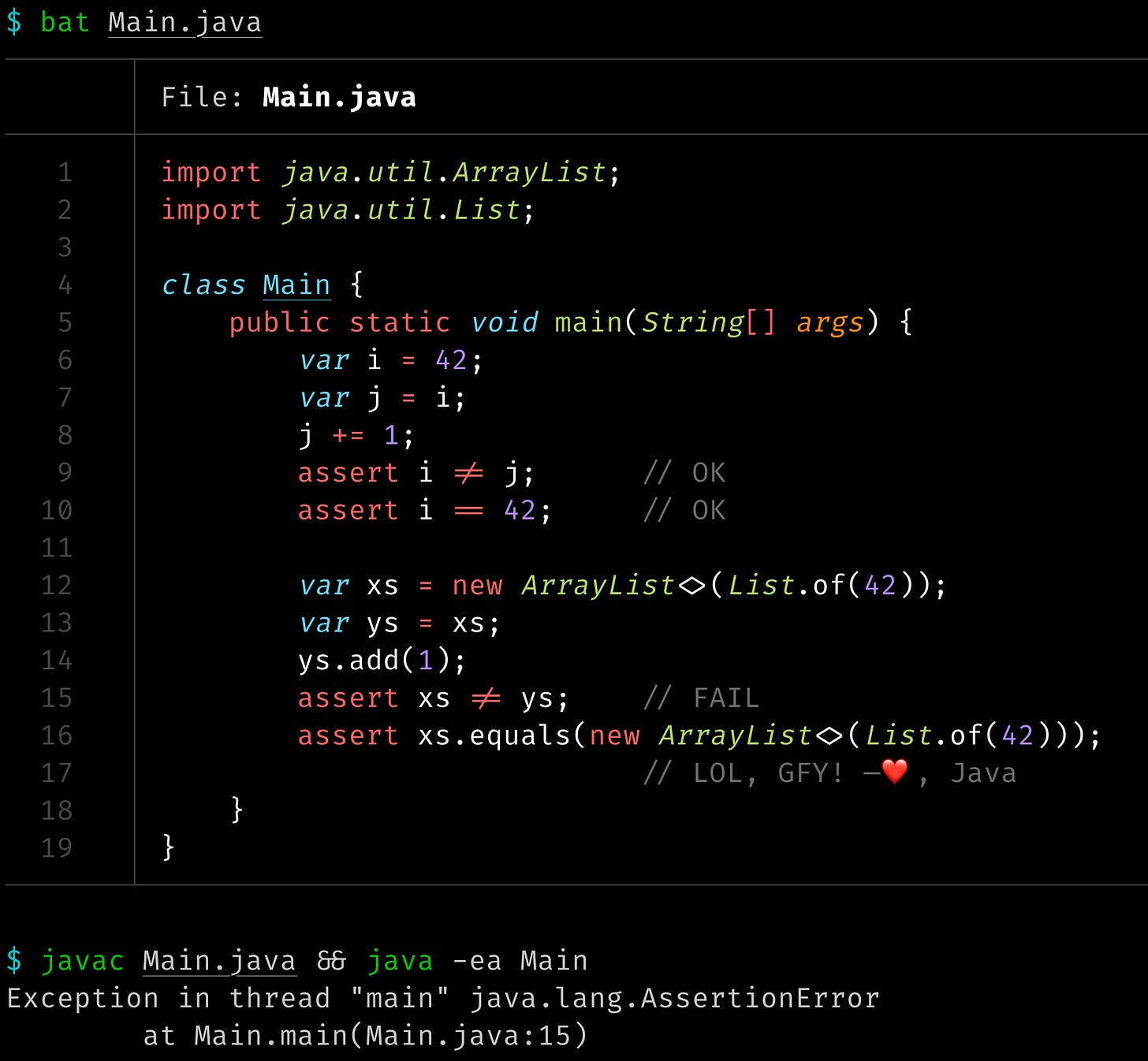

In the Java code below, copying a primitive integer and then mutating the copy has no effect on the original integer; which should make perfect sense, because why would manipulating a copy affect the original? These are variables, not voodoo dolls. Copying a variable of reference type and then manipulating it does affect the original though:

Java variables of reference types can also be bound to no object at all, in which case they’re considered null. Null values can be useful when used selectively, as is common in Golang (where null is called nil); but unlike Go, Java asininely makes all variables of non-primitive types nullable, whether you want them to be or not. Golang is proof that a GC language needn’t be burdened by this reference/null stupidity.

🪤 Several languages, including C++ and Java, use the term “reference” to mean a pointer that’s semantically not considered an object in its own right. In C++, for example, you can’t take the address of a reference (even though it really does live at some address in memory, barring optimizations like register-only variables). If you try, you’ll only get the address of the referenced object; that is, the address that the pointer points to. Rust also calls its borrowed pointers references, but there a reference is an ordinary value, and taking the address of one yields a reference to the reference.

The bizarreness of Java’s object model is a historical relic of a mid-1990s fever dream through which Object Oriented Programming (OOP) was seen as some kind of pinnacle of human achievement. Java-style OOP mandates inappropriate conflation of inheritance with polymorphism, as well as other pretentious garbage that’s hard to support efficiently on outré types like, you know, integers.

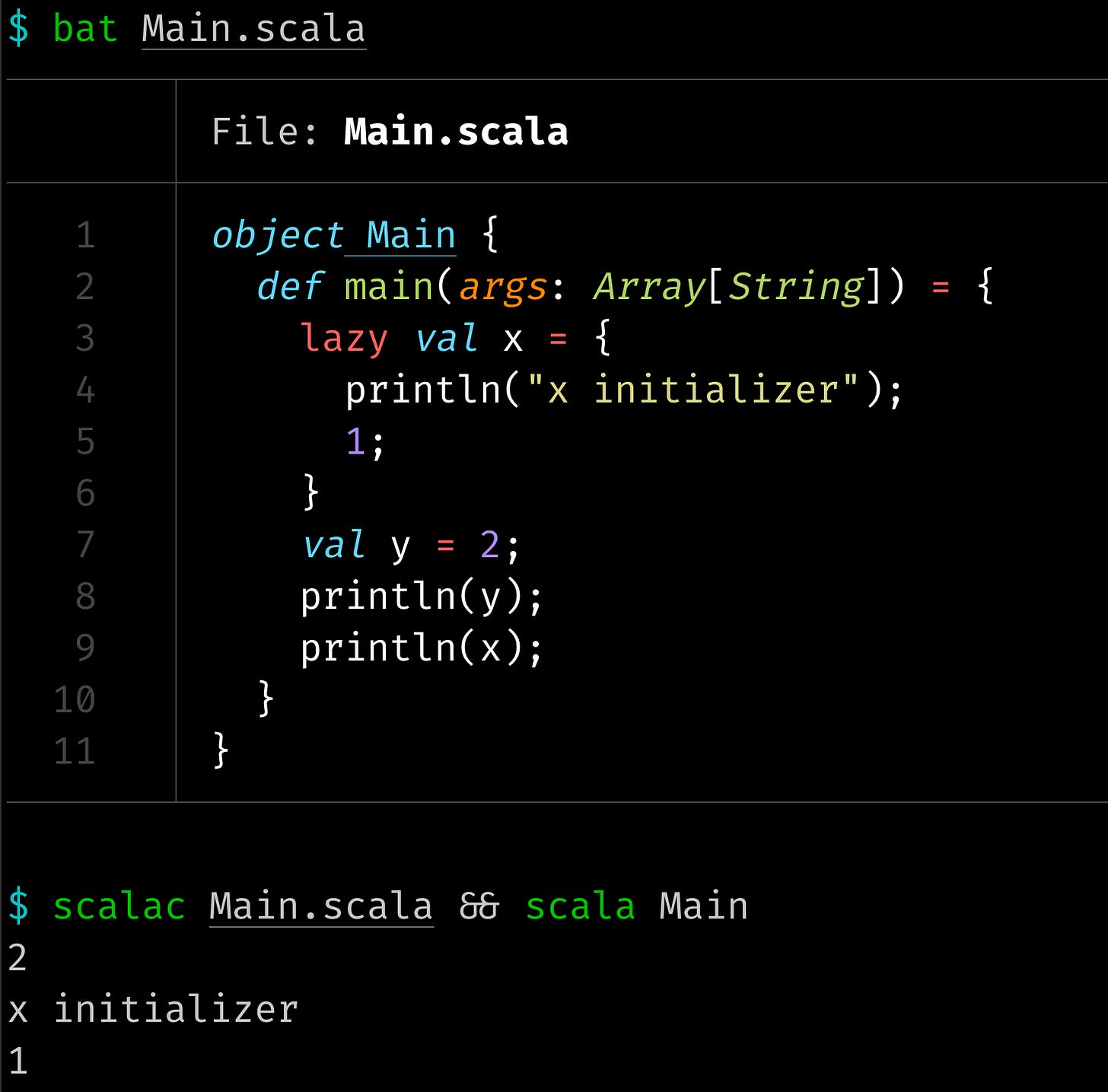

Lazy variables

Some languages support lazy variables, introducing yet another level of indirection. Rather than binding names to objects that represent values encoded by types (whew!), lazy variables bind names to objects called thunks. Thunks represent whatever computation is necessary to encode the desired value, and delay that computation until the value is actually used.
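To make the idea concrete, here's a minimal hand-rolled sketch in Rust. The Lazy type and its thunk and value fields are invented for this illustration; it isn't how any particular language implements lazy variables.

```rust
/// A hypothetical lazy variable: it holds either a pending thunk or the
/// value that the thunk produced.
struct Lazy<T, F: FnOnce() -> T> {
    thunk: Option<F>,
    value: Option<T>,
}

impl<T, F: FnOnce() -> T> Lazy<T, F> {
    fn new(thunk: F) -> Self {
        Lazy { thunk: Some(thunk), value: None }
    }

    /// Run the thunk on first use; afterward, return the cached value.
    fn get(&mut self) -> &T {
        if let Some(thunk) = self.thunk.take() {
            self.value = Some(thunk());
        }
        self.value.as_ref().expect("the thunk has run, so the value is set")
    }
}

fn main() {
    let mut x = Lazy::new(|| {
        println!("computing x");
        1
    });
    println!("x is defined, but nothing has been computed yet");
    println!("{}", x.get()); // first use: runs the thunk
    println!("{}", x.get()); // later uses: reuse the cached value
}
```

The name x is bound to an object that represents a computation, and only indirectly to the value 1.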

For example, in this Scala example, x is semantically bound to the value 1; but actually, it’s bound to a thunk that prints a string and then returns the value 1:

Scala papers over some of the more broken parts of Java by unifying primitive types and UDTs under a single base Any type, and blurring the distinction by making collections immutable by default. However, Scala also introduces its own academic nonsense, such as referring to objects as values in official documentation. Objects are not values, even if they’re immutable. Objects represent values, but unlike pure values, objects occupy real space and consume real resources in real computers here in the actual, non-academic world where we humans live.

Function objects

Sometimes, you’ll hear a new programmer say they’re “calling” a variable, when they mean they’re using it. That’s a good habit to break, because it gets confusing when variables can be bound to function objects—that is, objects representing functions. For example, suppose we use such a variable to register a callback in JavaScript:

const handleClick = function () {

console.log("hello");

};

button.addEventListener('click', handleClick);

We’ve used handleClick here, but we haven’t called it. It won’t be called unless someone clicks the button.

Unsized types

In most type systems, all objects of a given type have the same footprint size. Even types like strings and vectors that include pointers to variable amounts of data have some fixed footprint size, typically big enough to hold the pointer and some metadata. That’s not always good enough: Sometimes, objects of a particular type should be allowed to have different sizes. In most languages, working with such types requires the programmer to be exceedingly careful.

Parameter string starts immediately after this object. Be careful to add padding after string to ensure correct alignment of subsequent dm_target_spec.

—Linux kernel, struct dm_target_spec (dm-ioctl.h)

Rust

We need to talk about Rust for a minute, because Rust gets so much of this stuff staggeringly right.

Unified syntax for early and late binding variables

Rust unifies the syntax for static and dynamic bindings by adding a dyn keyword. I cannot overstate how cool this is. It reflects a profound understanding not only of the Computer Science (CS) involved, but of the actual day-to-day reality of software engineering. For example, suppose we have some abstract interface:

trait MyInterface {

fn do_something(&self);

}

Here’s a function that accepts a reference to any object whose type implements that interface, so long as the type is known statically (i.e., at compile time):

fn f(x: &impl MyInterface) {

x.do_something();

}

Now, suppose we realize that oops, we won’t know the type until runtime. Literally the only change we need is to replace impl with dyn:

fn f(x: &dyn MyInterface) {

x.do_something();

}

You can even have some functions take the type statically, and others dynamically. The decision is made where the variables are defined, not where the type is. So, so cool.
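Here's a self-contained sketch that puts both flavors side by side. The Cat and Dog types, the pick function, and the String return value on do_something are assumptions added so the result is observable; only the impl and dyn keywords are the point.

```rust
trait MyInterface {
    // Unlike the version above, this variant returns a String so that
    // callers can observe which implementation ran.
    fn do_something(&self) -> String;
}

struct Cat;
struct Dog;

impl MyInterface for Cat {
    fn do_something(&self) -> String {
        String::from("meow")
    }
}

impl MyInterface for Dog {
    fn do_something(&self) -> String {
        String::from("woof")
    }
}

/// Early binding: the concrete type is known at compile time.
fn call_static(x: &impl MyInterface) -> String {
    x.do_something()
}

/// Late binding: the concrete type is looked up at runtime.
fn call_dynamic(x: &dyn MyInterface) -> String {
    x.do_something()
}

/// Choose a type at runtime, so callers cannot know it statically.
fn pick(want_cat: bool) -> Box<dyn MyInterface> {
    if want_cat { Box::new(Cat) } else { Box::new(Dog) }
}

fn main() {
    println!("{}", call_static(&Cat));
    let pet = pick(std::env::args().count() > 1);
    println!("{}", call_dynamic(&*pet));
}
```

Neither Cat nor Dog had to declare up front whether it would be used statically or dynamically.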

Questionably sized types

In most languages, properly supporting types whose size may vary is tough. How can the language support even simple operations like copying an object, if it doesn’t know where in memory the object ends? Rust works around this issue with spectacular simplicity: It lets you declare questionably sized (?Sized) types that support only safe operations, and trigger compile-time errors for any operation that the language cannot safely support.
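As a quick sketch of what that looks like in practice, the hypothetical footprint function below accepts sized and unsized types alike, because it only ever touches them through a reference.

```rust
use std::mem::size_of_val;

/// `?Sized` relaxes the default requirement that `T` have a size known at
/// compile time. Taking `x` by reference keeps every operation here safe.
fn footprint<T: ?Sized>(x: &T) -> usize {
    size_of_val(x)
}

fn main() {
    println!("{}", footprint("hello"));           // T is str: 5 bytes
    println!("{}", footprint(&[1u32, 2, 3][..])); // T is [u32]: 12 bytes
    println!("{}", footprint(&7u64));             // T is u64: 8 bytes

    // Uncommenting the next line is a compile-time error, because a local
    // variable needs a statically known size:
    // let s: str = *"hello";
}
```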

Obviating padding

We discussed Rust’s flexible object layouts in Type Systems and Alignment.

Safe move semantics

Rust supports roughly the same move functionality as C++, but more efficiently, and without any foot guns. When you move an object in Rust, you’re semantically moving it out of the variable (which is now bound to the wrong region of memory), rather than leaving the variable bound to a zombie object (as in C++) or requiring the variable to go through a reference (as in Java). If you try to use a moved-from variable, the compiler reports an error. (There’s a loophole for primitive types, or indeed any types that opt in to bitwise copying by implementing the Copy trait, so something like let x = 42; let y = x; doesn’t sacrifice access to x.)
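A short sketch shows both behaviors. The moved_buffer_stays_put helper is a name invented for this example; it checks that moving a Vec copies only the footprint, leaving the heap data where it was.

```rust
/// Move a Vec into a new variable and report whether its heap buffer
/// stayed at the same address.
fn moved_buffer_stays_put(v: Vec<i32>) -> bool {
    let before = v.as_ptr();
    let w = v; // move: only the footprint (pointer and metadata) is copied
    // println!("{:?}", v); // compile error: `v` was moved from
    before == w.as_ptr()
}

fn main() {
    println!("{}", moved_buffer_stays_put(vec![1, 2, 3]));

    // i32 implements Copy, so assignment copies instead of moving.
    let x = 42;
    let y = x;
    println!("{} {}", x, y); // x is still usable
}
```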

Everybody got that?

There’s a lot more to understand if you want to be a systems programmer, such as volatility and atomicity of variables that are accessed by multiple threads, but this is already a long post for what’s usually passed off as a simple topic. Congratulations on reading this far, and please do leave a comment if you’d care to guide further discussion.

![Photo of a macho dude doing curls and giving us bedroom eyes. Overlaid text says: Bro, do you even [interface inheritance]?](https://substackcdn.com/image/fetch/$s_!PJ7v!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2F859f68e1-9832-42a4-a4f9-19596cee8be5_2120x1414.jpeg)